If you have a machine generating various cryptographic keys, you really need an unpredictable state in your entropy pool. To reach a satisfactory level of unpredictability, the Linux kernel gathers environmental noise to feed this famous entropy pool. Of course, gathering enough unpredictable information from a deterministic system is not a trivial task.

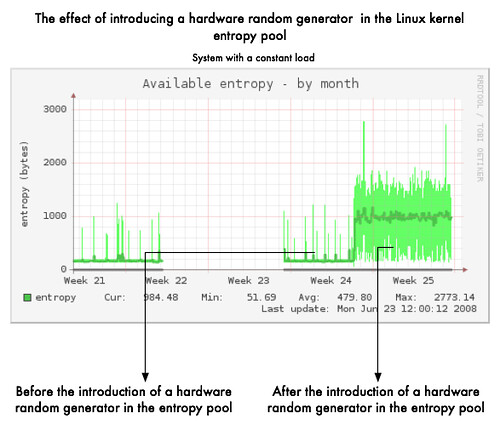

In such conditions, an independent random source is very useful: feeding the pool continuously improves its unpredictability. It also keeps your favourite cryptographic software from stalling for lack of entropy (stalling is often better than generating guessable keys). In the graph, you can clearly see the improvement in entropy availability. On an idle system, it is difficult for the kernel random generator to gather environmental noise, as the system behaves deterministically while doing "near" nothing. Here the hardware-based random generator feeds the entropy pool regularly (starting at the end of Week 24), independently of the system load or use.
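If you want to watch the same figure on your own machine, the kernel exposes its current entropy estimate in `/proc/sys/kernel/random/entropy_avail`. The snippet below is only a minimal sketch: the function name `read_entropy_avail` is mine, and the `path` parameter exists purely so the function can be pointed at another file.

```python
from pathlib import Path

# Kernel interface exposing the current entropy estimate (in bits).
ENTROPY_AVAIL = "/proc/sys/kernel/random/entropy_avail"


def read_entropy_avail(path=ENTROPY_AVAIL):
    """Return the entropy estimate stored in `path` as an integer.

    `read_entropy_avail` and its `path` parameter are illustrative names,
    not part of any existing tool.
    """
    return int(Path(path).read_text().strip())
```

Calling `read_entropy_avail()` at regular intervals (from cron, for example) and plotting the values should give a curve comparable to the graph.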

If you are the lucky owner of a decent Intel motherboard, you should have the famous Intel FWH 82802AB/AC, which includes a hardware random generator (based on thermal noise). You can use a tool like rngd to feed the Linux kernel entropy pool in a secure way. By "in a secure way", I mean really feeding the pool with "unpredictable" data, by continuously checking the data against the existing FIPS tests.
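To give an idea of what such a test looks like, here is a rough sketch of the simplest one, the monobit test, as I understand it from FIPS 140-2: take a block of 20,000 bits and check that the number of ones falls strictly between 9,725 and 10,275. This is not the code of rngd, just an illustration; the names `monobit_ok`, `BLOCK_BYTES`, `MONOBIT_LOW` and `MONOBIT_HIGH` are my own.

```python
BLOCK_BYTES = 2500  # 20,000 bits, the block size assumed for the test
MONOBIT_LOW = 9725  # assumed FIPS 140-2 lower bound (exclusive)
MONOBIT_HIGH = 10275  # assumed FIPS 140-2 upper bound (exclusive)


def monobit_ok(block):
    """Return True if the count of 1 bits in a 20,000-bit block is in range."""
    if len(block) != BLOCK_BYTES:
        raise ValueError("expected a block of exactly 2500 bytes")
    ones = sum(bin(byte).count("1") for byte in block)
    return MONOBIT_LOW < ones < MONOBIT_HIGH
```

A block of all zeros is rejected, but a regular pattern such as `b"\xaa" * 2500` has exactly 10,000 ones and passes. That is why this test is only one of several (the others look at the distribution of nibbles and at runs of identical bits), and why passing them is a sanity check on the hardware rather than a proof of randomness.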

That's the bright side of life, but I would like to close this quick post with something from the OpenSSL FAQ:

1. Why do I get a "PRNG not seeded" error message? ... [some confusing information] All OpenSSL versions try to use /dev/urandom by default; starting with version 0.9.7, OpenSSL also tries /dev/random if /dev/urandom is not available. ... [more confusing information]

If I understood the FAQ correctly, OpenSSL uses /dev/urandom by default, and not /dev/random first? If your entropy pool is empty or your hardware random generator is not active, OpenSSL will use the unlimited (non-blocking) /dev/urandom and could draw on data that may be predictable. Something to remember if your software relies on OpenSSL.
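The fallback order described in the FAQ can be pictured with a few lines of Python. To be clear, this is not OpenSSL's code, only a sketch of the behaviour as I read it; `pick_random_device` and its `candidates` parameter are hypothetical names.

```python
import os


def pick_random_device(candidates=("/dev/urandom", "/dev/random")):
    """Return the first readable device in `candidates`, or None.

    The default order mirrors the FAQ wording: /dev/urandom first,
    /dev/random only as a fallback.
    """
    for path in candidates:
        if os.access(path, os.R_OK):
            return path
    return None
```

With this order, /dev/random is consulted only when /dev/urandom is missing, so a software stack that wants the blocking device has to ask for it explicitly.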

Update on 03/08/2008:

Following a comment on news.ycombinator.com, I tend to agree with the commenter that my statement "/dev/urandom is predictable" is wrong; it was a shortcut to urge people to use their hardware random generator. But for key generation (OpenSSL is often used for that purpose), the authors of the random generator (as stated in the section "Exported interfaces ---- output") also recommend using /dev/random (and not urandom) when high randomness is required.

It's true that when you are running out of entropy, you depend only on the strength of the SHA algorithm. But if you are continuously feeding the one-way hash function with a "regular pattern" (another shortcut), you could start to run into problems like the one in a linear congruential generator when the seed is a single character… It's also true that the SHA algorithm is still pretty secure. So why take an additional risk (additional because maybe the hardware random generator is already broken ;-) by using /dev/urandom if you already have a high-speed hardware random generator that could nicely feed /dev/random?